Using OpenAI Batch API to Cluster SEO Keywords and Cut Costs by 50%

OpenAI offers a “Batch API” that allows users to send grouped requests at half the usual cost. This feature is particularly useful when you don’t need immediate answers and is ideal for tasks that can wait up to 24 hours.

The API was released last month, in April, and I’ve noticed many people aren’t aware of it yet. However, it’s super helpful and affordable. There are no extra requirements to use it; you just need the same OpenAI API key you already have.

Why Use the Batch API?

There are many use cases where immediate responses are not necessary, or rate limits prevent executing a large number of queries quickly. Batch processing jobs are often helpful in scenarios such as these. Here are a few examples in the marketing industry that I could think of:

- Clustering SEO Keywords: If you have a large list of keywords to group and usually hit rate or context limits, you can submit one batch containing multiple split requests.

- Product Feed Optimization: Say you want to generate new titles and descriptions for thousands of products; the Batch API is a good fit here.

- Document Summarization: Summarize large documents easily when immediate output is not required.

Supported Models

The Batch API works with pretty much all of OpenAI’s models, including popular ones like GPT-3.5-turbo-16k and GPT-4-turbo. You can find a comprehensive list of supported models by checking OpenAI’s official documentation.

How to Use the OpenAI Batch API?

It’s actually quite simple; you just need to prepare a batch file in JSONL format. In this article, I will walk through an example of clustering SEO keywords, focusing on the technical side rather than the prompt. We will use a pretty simple prompt in this example:

prompt = "Cluster the following keywords into several groups based on their semantic relevance. Present the data as a table. Return the original keywords along with a new column called 'cluster'"

Let’s say you have over 150,000 keywords you want to cluster. We need to split this into several requests, since the model we will be using, GPT-4 Turbo, has a context limit of 128k tokens, and that limit has to cover the prompt, the keywords, and the response.
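To make the splitting step concrete, here is a minimal sketch that packs keywords into chunks under a token budget. The helper name `split_by_token_budget` and the rule of thumb of roughly 4 characters per token are my assumptions for illustration; this is a rough estimate, not a real tokenizer count.

```python
def split_by_token_budget(keywords, max_tokens, chars_per_token=4):
    """Greedily pack keywords into chunks whose estimated size stays under max_tokens.

    The estimate is a crude heuristic (characters / chars_per_token),
    an assumption for illustration rather than an exact token count.
    """
    chunks, current, current_tokens = [], [], 0.0
    for keyword in keywords:
        # Count one extra character for the separator between keywords.
        estimate = (len(keyword) + 1) / chars_per_token
        # Start a new chunk when adding this keyword would exceed the budget.
        if current and current_tokens + estimate > max_tokens:
            chunks.append(current)
            current, current_tokens = [], 0.0
        current.append(keyword)
        current_tokens += estimate
    if current:
        chunks.append(current)
    return chunks


# Tiny budget so the split is visible on a short list.
keywords = ["seo audit", "backlinks", "page authority", "domain score"]
chunks = split_by_token_budget(keywords, max_tokens=6)
print(chunks)
```

In practice you would pick a budget well below the model's context limit, so there is room left for the prompt and for the model's answer.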

We need to create a list of requests, where each request is formatted as a dictionary containing your SEO keywords. You can provide the keywords in any suitable format; just make sure they are separated by a character like a comma or pipe, so the model can distinguish between keywords.

requests = [
    {
        # Each request needs its own unique custom_id
        "custom_id": "keyword_cluster_1",
        "method": "POST",
        "url": "/v1/chat/completions",
        "body": {
            "model": "gpt-4-turbo",
            "messages": [
                {"role": "system", "content": prompt},
                {
                    "role": "user",
                    "content": "Here are the keywords: ['SEO', 'optimization', 'Google ranking']",
                },
            ],
        },
    },
    {
        "custom_id": "keyword_cluster_2",
        "method": "POST",
        "url": "/v1/chat/completions",
        "body": {
            "model": "gpt-4-turbo",
            "messages": [
                {"role": "system", "content": prompt},
                {
                    "role": "user",
                    "content": "Here are the keywords: ['backlinks', 'page authority', 'domain score']",
                },
            ],
        },
    },
]

Note that you need to provide each request with a unique ID in the custom_id key.
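With more than a handful of chunks, writing these dictionaries by hand gets tedious. Here is a small sketch that generates them from a list of keyword chunks. The function name `build_requests` and the pipe separator are my own choices; the request fields mirror the ones used in this article. Deriving the ID from the chunk index keeps every `custom_id` unique.

```python
def build_requests(chunks, prompt, model="gpt-4-turbo"):
    """Build one Batch API request dictionary per keyword chunk."""
    batch_requests = []
    for index, chunk in enumerate(chunks, start=1):
        batch_requests.append(
            {
                # The index guarantees a unique ID for every request.
                "custom_id": f"keyword_cluster_{index}",
                "method": "POST",
                "url": "/v1/chat/completions",
                "body": {
                    "model": model,
                    "messages": [
                        {"role": "system", "content": prompt},
                        {
                            "role": "user",
                            # Pipe-separated so the model can tell keywords apart.
                            "content": "Here are the keywords: " + " | ".join(chunk),
                        },
                    ],
                },
            }
        )
    return batch_requests


example_prompt = "Cluster the following keywords into groups based on semantic relevance."
example_chunks = [
    ["SEO", "optimization", "Google ranking"],
    ["backlinks", "page authority", "domain score"],
]
generated = build_requests(example_chunks, example_prompt)
print([r["custom_id"] for r in generated])
```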

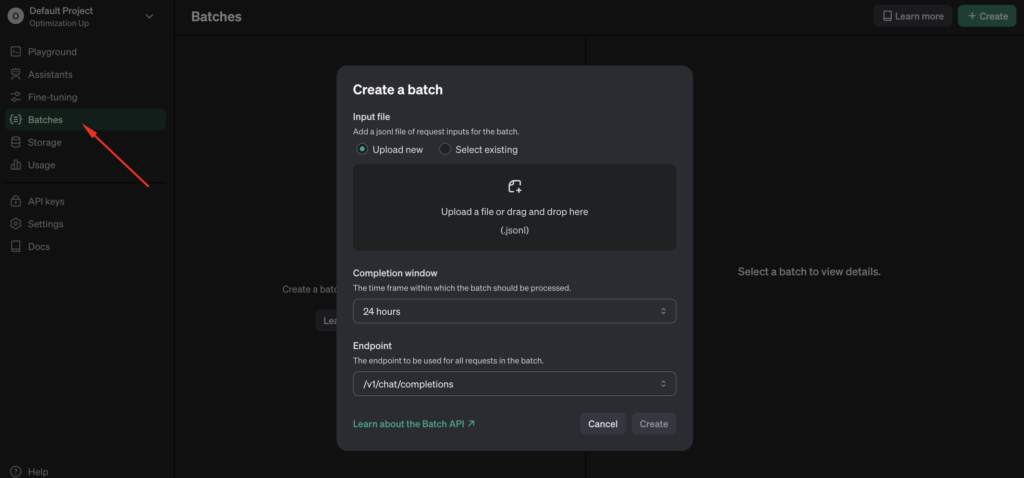

If you don’t feel like using the API to upload the file, you can actually use the UI instead. It’s quite simple: visit the playground and fill in these details.

Convert to JSONL and Upload

Now, convert your data to a JSONL file and upload it using the file API to obtain a file ID. This process is straightforward. First, ensure you have installed the OpenAI package using the command below:

pip install openai

Now, let’s write the list of request dictionaries to a JSONL file (one JSON object per line) and make a POST request to upload the file:

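import json

from openai import OpenAI

client = OpenAI()  # picks up the API key from the OPENAI_API_KEY environment variable

# Write one request per line (JSONL)
with open('seo_keywords.jsonl', 'w') as file:
    for request in requests:
        file.write(json.dumps(request) + '\n')

# Upload the file so it can be referenced by the batch
with open('seo_keywords.jsonl', 'rb') as file:
    batch_input_file = client.files.create(file=file, purpose='batch')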
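Before uploading, it can be worth running a quick sanity check on the JSONL lines. The sketch below is my own hypothetical helper (`validate_batch_lines`), not part of the OpenAI package, and the checks are ones I chose rather than OpenAI's official validation: each line must be valid JSON, contain the four fields used in this article, and have a unique `custom_id`.

```python
import json

REQUIRED_KEYS = ("custom_id", "method", "url", "body")


def validate_batch_lines(lines):
    """Return a list of problems found in JSONL batch lines (empty means OK)."""
    problems, seen_ids = [], set()
    for number, line in enumerate(lines, start=1):
        try:
            request = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {number}: invalid JSON ({exc.msg})")
            continue
        if not isinstance(request, dict):
            problems.append(f"line {number}: expected a JSON object")
            continue
        for key in REQUIRED_KEYS:
            if key not in request:
                problems.append(f"line {number}: missing {key!r}")
        custom_id = request.get("custom_id")
        if custom_id is not None:
            if custom_id in seen_ids:
                problems.append(f"line {number}: duplicate custom_id {custom_id!r}")
            seen_ids.add(custom_id)
    return problems


good_line = json.dumps(
    {"custom_id": "a", "method": "POST", "url": "/v1/chat/completions", "body": {}}
)
# The second line repeats the ID and the third is not valid JSON.
problems = validate_batch_lines([good_line, good_line, "{not valid json"])
for problem in problems:
    print(problem)
```

To check a file on disk, you could pass the open file object directly, since iterating over it yields one line at a time.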

Submit the Batch

To submit the request, we just need a single API call:

batch = client.batches.create(
    input_file_id=batch_input_file.id,
    endpoint="/v1/chat/completions",
    completion_window="24h",
)

Note that the completion window can only be set to 24 hours for now, but OpenAI may allow different intervals in the future.

Check the Status and Retrieve Results

After we successfully submit the request, results are usually available within 12 to 18 hours. You can check the status and download the results as follows:

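# Fetch the latest state of the batch
status = client.batches.retrieve(batch.id)

# The returned object exposes attributes, not dictionary keys
if status.status == 'completed':
    # The output file ID is only set on the refreshed batch object
    output = client.files.content(status.output_file_id)
    print(output.text)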
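The downloaded output is itself a JSONL file, with one result per request. Here is a hedged sketch of how you might map each result back to its request. It assumes each line carries `custom_id`, `response` (with `status_code` and a chat completion `body`), and `error`, which is the shape OpenAI documents at the time of writing; the helper name `parse_batch_output` and the sample data are my own.

```python
import json


def parse_batch_output(jsonl_text):
    """Split batch output into {custom_id: answer} and {custom_id: error details}."""
    results, errors = {}, {}
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        record = json.loads(line)
        custom_id = record["custom_id"]
        response = record.get("response")
        failed = (
            record.get("error")
            or not response
            or response.get("status_code") != 200
        )
        if failed:
            errors[custom_id] = record.get("error") or response
            continue
        # Chat completion body: take the text of the first choice.
        results[custom_id] = response["body"]["choices"][0]["message"]["content"]
    return results, errors


# Hypothetical sample output: one success and one failure.
sample_output = "\n".join(
    [
        json.dumps(
            {
                "id": "batch_req_1",
                "custom_id": "keyword_cluster_1",
                "response": {
                    "status_code": 200,
                    "body": {
                        "choices": [
                            {"message": {"role": "assistant", "content": "| keyword | cluster |"}}
                        ]
                    },
                },
                "error": None,
            }
        ),
        json.dumps(
            {
                "id": "batch_req_2",
                "custom_id": "keyword_cluster_2",
                "response": None,
                "error": {"code": "example_error", "message": "hypothetical failure"},
            }
        ),
    ]
)
results, errors = parse_batch_output(sample_output)
print(results)
print(list(errors))
```

Keying everything by `custom_id` matters because the output lines are not guaranteed to come back in the same order as the input.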

The Batch API is a cost-effective tool for managing large-scale SEO tasks like keyword clustering. Processing data asynchronously saves time and reduces costs, making it a valuable asset for digital marketers looking to optimize their SEO strategies efficiently.
